Several ways to deploy k8s on CentOS 7.9

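- I. Common ways to deploy k8s
- II. Deploying with kubeadm
- III. Deploying from binaries
  - 1. Hardware (virtual machines)
  - 2. Environment preparation
  - 3. Deploy the etcd cluster
  - 4. Install Docker on all nodes
  - 5. Deploy the master node
  - 6. Deploy kube-controller-manager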

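I. Common ways to deploy k8s

1. Deploying with kubeadm

kubeadm is the deployment tool recommended by the Kubernetes project. It initializes the master node, joins worker nodes, and automates most of the setup; it also supports cluster upgrades and maintenance. It is much simpler than a manual deployment, but the machines must meet certain hardware and software requirements.

2. Deploying from binaries

With this approach you download and install the k8s components yourself (kube-apiserver, kube-controller-manager, kube-scheduler, kubelet, kube-proxy and so on) and write their parameters and start commands by hand. It suits cases that need custom configuration and fine-grained control, at the cost of more time and effort for maintenance and upgrades.

3. Managed Kubernetes services from cloud providers

Many cloud providers offer managed Kubernetes, for example Amazon EKS, Microsoft AKS and Google GKE. With these services you can create and manage clusters quickly without maintaining the underlying infrastructure, and you also get platform features such as autoscaling, monitoring and security services.

4. Container images or automated deployment tools

This covers deploying the Kubernetes components from container images as well as using automation tools such as Ansible, Terraform, Kubespray and Rancher. These tools automate installation and configuration through preconfigured templates or playbooks, which suits teams that want fast, standardized deployments. Kubespray, for example, uses Ansible to deploy Kubernetes clusters and supports several cloud platforms as well as on-premises environments.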

This article walks through two of these approaches: deploying with kubeadm and deploying from binaries.

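II. Deploying with kubeadm

1. Hardware (virtual machines)

| Role | Hostname | IP address |
|---|---|---|
| master | k8s-master | 192.168.112.10 |
| node | k8s-node1 | 192.168.112.20 |
| node | k8s-node2 | 192.168.112.30 |

OS: CentOS Linux release 7.9.2009 (Core)

Each machine needs at least 2 CPU cores and 3 GB of RAM.

Set the hostname on each machine with `hostnamectl set-hostname <hostname>`; the change persists across reboots.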

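2. Environment preparation

2.1. Disable the firewall on all machines

```shell
systemctl stop firewalld      # stop it now
systemctl disable firewalld   # do not start it at boot
systemctl status firewalld    # check the state
```

2.2. Disable SELinux on all machines

```shell
sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config   # permanent, takes effect after a reboot
setenforce 0                                                          # permissive mode for the current boot
```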

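2.3. Disable swap on all machines

```shell
swapoff -a                            # turn swap off for the current boot
sed -ri 's/.*swap.*/#&/' /etc/fstab   # comment out swap entries so it stays off after a reboot
```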
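Keeping swap off permanently usually means commenting out the swap entries in `/etc/fstab`. The sketch below is one way to do that so the step can be repeated safely: it skips lines that are already commented. It assumes GNU sed (as shipped with CentOS 7); the function name `comment_swap_entries` is made up for this illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the original steps): comment out active
# swap entries in an fstab-style file, leaving commented lines untouched.
comment_swap_entries() {
  local fstab="$1"
  # On lines that do not start with '#', prefix '#' when a whitespace-delimited
  # "swap" field is present (mount point or filesystem type column).
  sed -ri '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab"
}

# Example (as root): comment_swap_entries /etc/fstab
```

Running it twice leaves a single `#` in front of each swap line, so it is safe to include in a script that may be re-run.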

2.4. Map hostnames to IP addresses on all machines

Edit `/etc/hosts` (for example with `vim /etc/hosts`) so that it contains:

```text
127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
::1         localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.112.10 k8s-master
192.168.112.20 k8s-node1
192.168.112.30 k8s-node2
```
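Editing the hosts file by hand on three machines is easy to get wrong, so a scripted, repeatable variant can help. The sketch below appends an entry only when the hostname is not already present. The function name `add_host_entry` and its optional third argument (the file to edit, defaulting to `/etc/hosts`) are assumptions for this illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "ip name" to a hosts file unless the hostname
# already appears in it, so re-running the step does not create duplicates.
add_host_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  if ! grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Example (as root, on every machine):
#   add_host_entry 192.168.112.10 k8s-master
#   add_host_entry 192.168.112.20 k8s-node1
#   add_host_entry 192.168.112.30 k8s-node2
```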

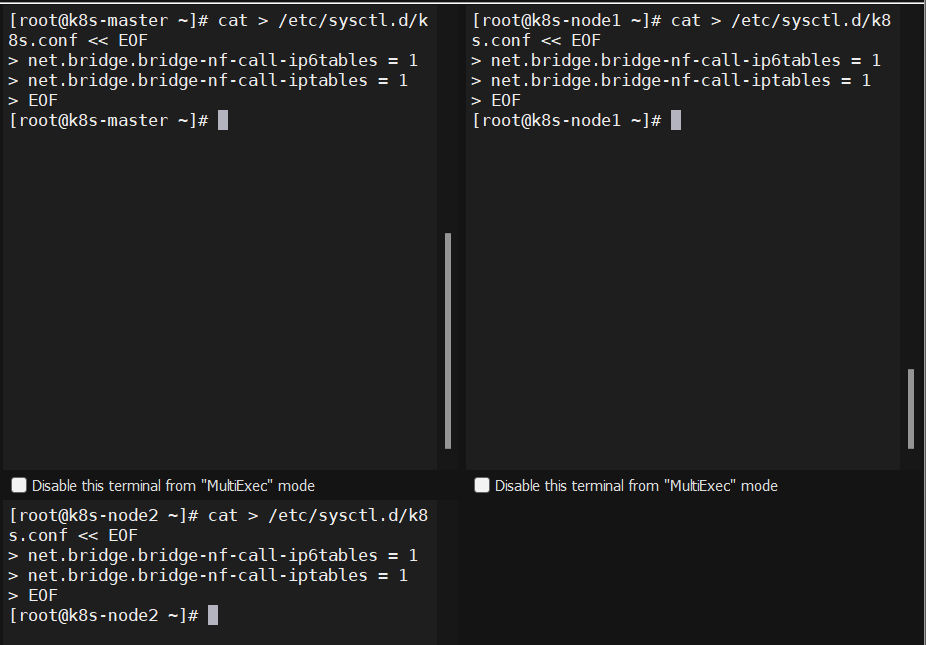

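2.5. Pass bridged IPv4 traffic to the iptables chains on all hosts

```shell
modprobe br_netfilter   # the net.bridge.* keys exist only while this module is loaded
cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
sysctl --system   # apply the settings
```

2.6. Time synchronization

```shell
yum install -y ntpdate
ntpdate time.windows.com
```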

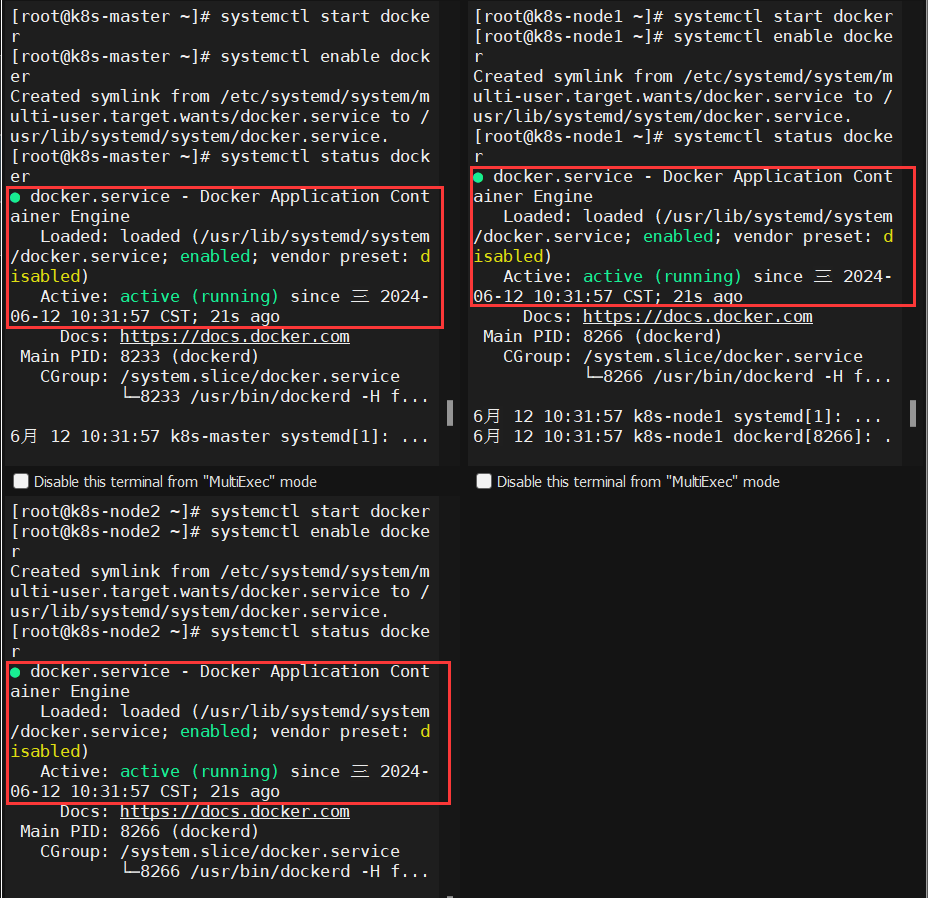

3. Install Docker on all nodes

```shell
yum install wget.x86_64 -y
rm -rf /etc/yum.repos.d/*   # deletes every existing repo definition; back them up first if you need them
wget -O /etc/yum.repos.d/centos7.repo http://mirrors.aliyun.com/repo/Centos-7.repo
wget -O /etc/yum.repos.d/epel-7.repo http://mirrors.aliyun.com/repo/epel-7.repo
wget -O /etc/yum.repos.d/docker-ce.repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
yum install docker-ce -y
systemctl start docker && systemctl enable docker
```

3.1. Configure a registry mirror

```shell
cat > /etc/docker/daemon.json <<EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "registry-mirrors": ["https://jzjzrggd.mirror.aliyuncs.com"]
}
EOF

systemctl daemon-reload
systemctl restart docker.service
```

4. Cluster deployment

4.1. Configure the Kubernetes yum repository on all nodes and install kubeadm, kubelet and kubectl

```shell
cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
yum install kubelet-1.22.2 kubeadm-1.22.2 kubectl-1.22.2 -y
systemctl enable kubelet && systemctl start kubelet
```

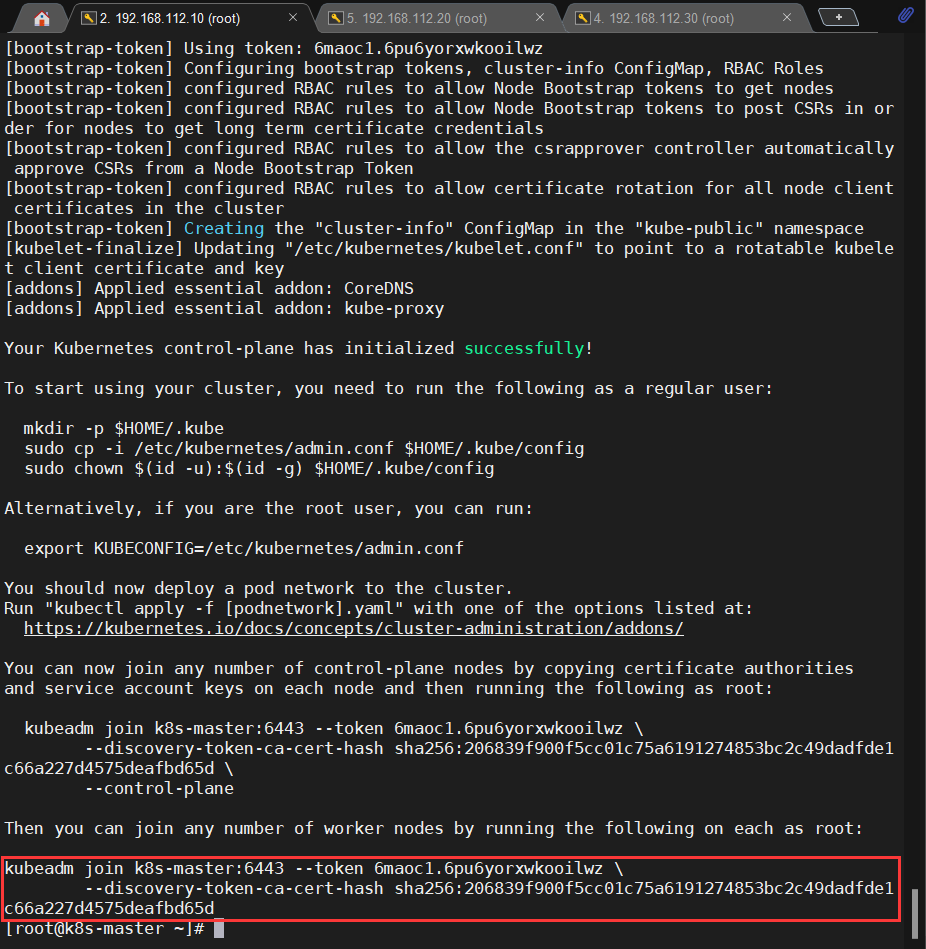

4.2. Deploy the master node

```shell
kubeadm init \
  --apiserver-advertise-address=192.168.112.10 \
  --image-repository registry.aliyuncs.com/google_containers \
  --kubernetes-version v1.22.2 \
  --control-plane-endpoint k8s-master \
  --service-cidr=172.16.0.0/16 \
  --pod-network-cidr=10.244.0.0/16
```

When initialization succeeds, the output ends with a join command like this one:

```shell
kubeadm join k8s-master:6443 --token 6maoc1.6pu6yorxwkooilwz \
  --discovery-token-ca-cert-hash sha256:206839f900f5cc01c75a6191274853bc2c49dadfde1c66a227d4575deafbd65d
```

Save this command. It is what joins a worker node to the existing Kubernetes cluster.

4.3.1. Error encountered

```text
Here is one example how you may list all Kubernetes containers running in docker:
    - 'docker ps -a | grep kube | grep -v pause'
    Once you have found the failing container, you can inspect its logs with:
    - 'docker logs CONTAINERID'

error execution phase wait-control-plane: couldn't initialize a Kubernetes cluster
To see the stack trace of this error execute with --v=5 or higher
```

4.3.2. Fix

```shell
rm -rf /etc/containerd/config.toml
systemctl restart containerd
```

4.4. Follow the instructions printed by kubeadm init

The output says: "To start using your cluster, you need to run the following as a regular user".

```shell
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

# Alternatively, if you are the root user:
export KUBECONFIG=/etc/kubernetes/admin.conf
```

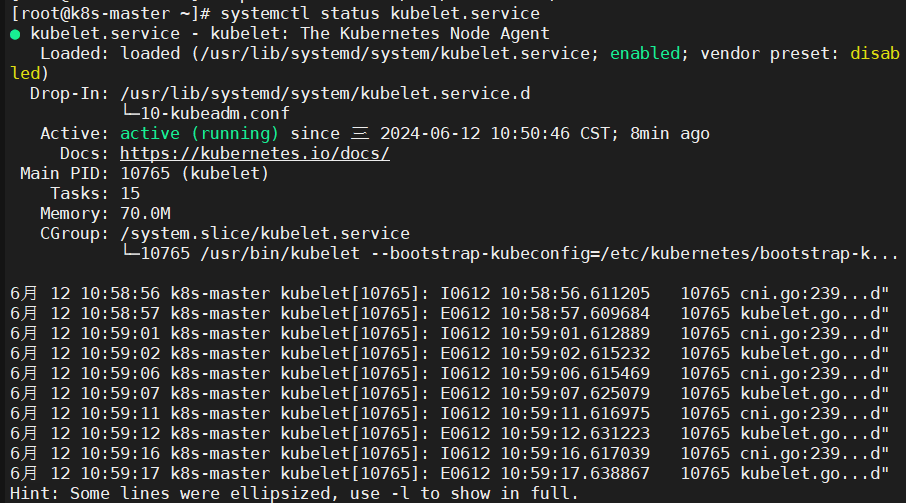

4.5. Check the state of kubelet.service on the master node

```shell
systemctl status kubelet.service
```

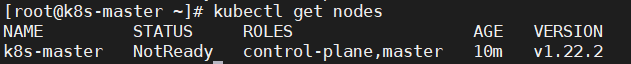

4.6. Check the node status

The node reports NotReady because no network plugin has been deployed yet.

```text
[root@k8s-master ~]# kubectl get nodes
NAME         STATUS     ROLES                  AGE   VERSION
k8s-master   NotReady   control-plane,master   10m   v1.22.2
```

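5. Install the network plugin

Flannel is a network plugin for k8s; it provides the network that lets containers on different nodes of the cluster talk to each other.

```shell
# Pulling the required image manually in advance is recommended
[root@k8s-master ~]# docker pull quay.io/coreos/flannel:v0.14.0
[root@k8s-master ~]# wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
[root@k8s-master ~]# kubectl apply -f kube-flannel.yml
```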

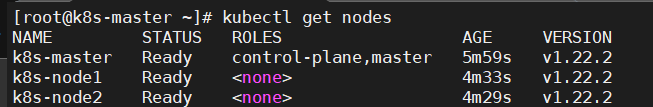

6. Add the worker nodes

```shell
# Pull the network plugin image on each node
[root@k8s-node1 ~]# docker pull quay.io/coreos/flannel:v0.14.0
[root@k8s-node2 ~]# docker pull quay.io/coreos/flannel:v0.14.0
[root@k8s-node1 ~]# kubeadm join k8s-master:6443 --token 6maoc1.6pu6yorxwkooilwz \
> --discovery-token-ca-cert-hash sha256:206839f900f5cc01c75a6191274853bc2c49dadfde1c66a227d4575deafbd65d
[root@k8s-node2 ~]# kubeadm join k8s-master:6443 --token 6maoc1.6pu6yorxwkooilwz \
> --discovery-token-ca-cert-hash sha256:206839f900f5cc01c75a6191274853bc2c49dadfde1c66a227d4575deafbd65d
```

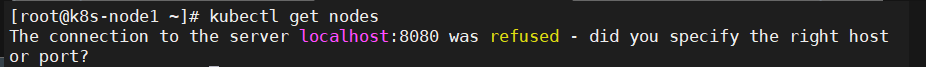

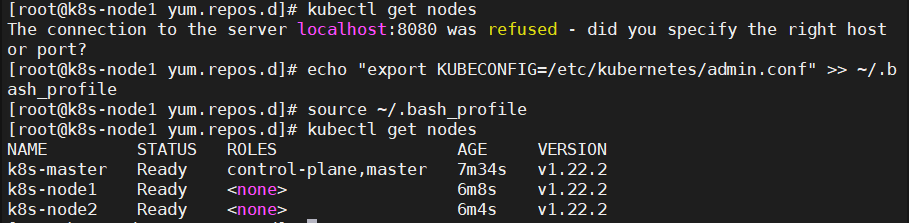

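6.1. kubectl on a worker node fails with "The connection to the server localhost:8080 was refused - did you specify the right host or port?"

This happens because kubectl on the node has no kubeconfig and falls back to its default address, localhost:8080.

6.2. Solution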

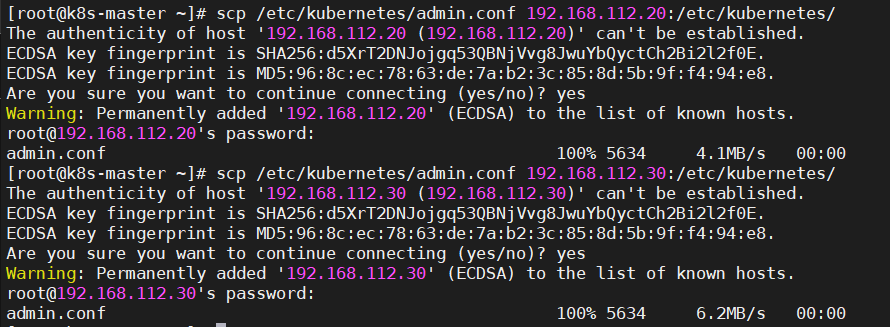

6.2.1. Copy "/etc/kubernetes/admin.conf" from the master to "/etc/kubernetes/" on each node

On the master node:

```shell
[root@k8s-master ~]# scp /etc/kubernetes/admin.conf 192.168.112.20:/etc/kubernetes/
[root@k8s-master ~]# scp /etc/kubernetes/admin.conf 192.168.112.30:/etc/kubernetes/
```

6.2.2. Set the environment variable on the worker nodes

On each worker node:

```shell
# Node1
[root@k8s-node1 ~]# echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile
[root@k8s-node1 ~]# source ~/.bash_profile

# Node2
[root@k8s-node2 ~]# echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile
[root@k8s-node2 ~]# source ~/.bash_profile
```

This completes the kubeadm deployment of the k8s cluster.

III. Deploying from binaries

1. Hardware (virtual machines)

| Role | Hostname | IP address | Components |
|---|---|---|---|
| master | k8s-master | 192.168.112.10 | kube-apiserver, kube-controller-manager, kube-scheduler, kubelet, kube-proxy, docker, etcd |
| node | k8s-node1 | 192.168.112.20 | kubelet, kube-proxy, docker, etcd |
| node | k8s-node2 | 192.168.112.30 | kubelet, kube-proxy, docker, etcd |

OS: CentOS Linux release 7.9.2009 (Core)

Each machine needs at least 2 CPU cores and 3 GB of RAM.

Set the hostname on each machine with `hostnamectl set-hostname <hostname>`; the change persists across reboots.

2. Environment preparation

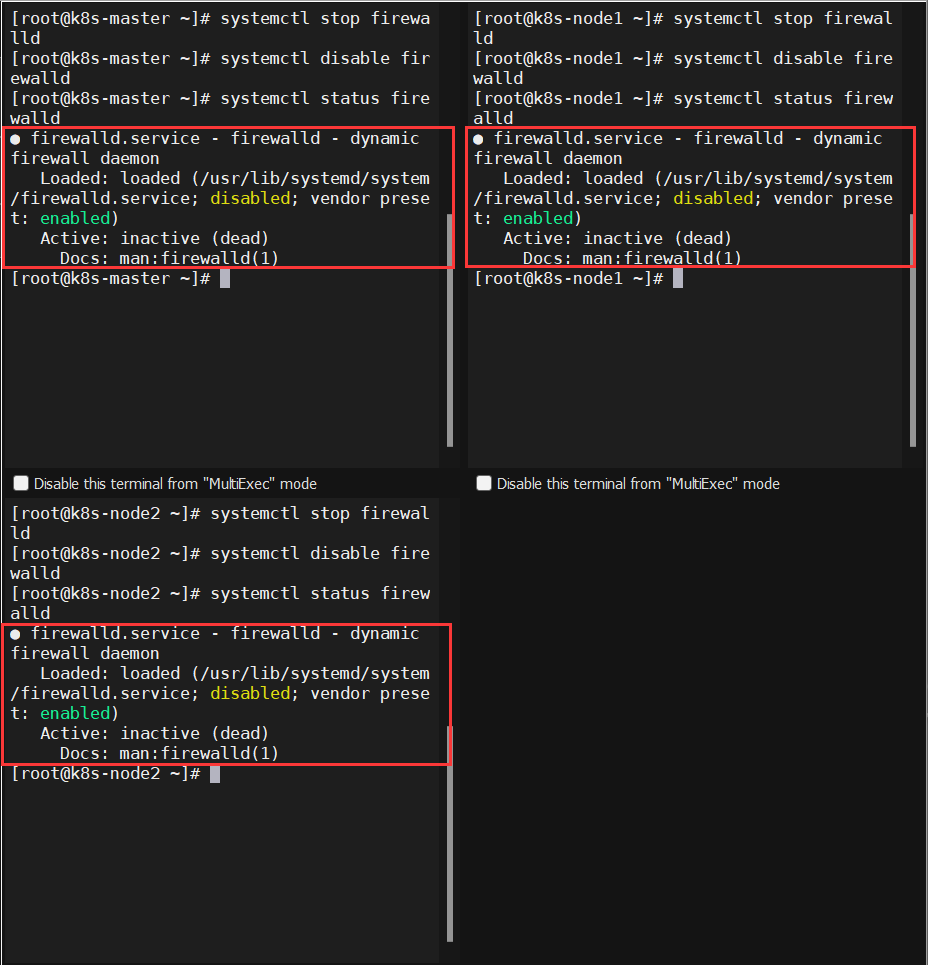

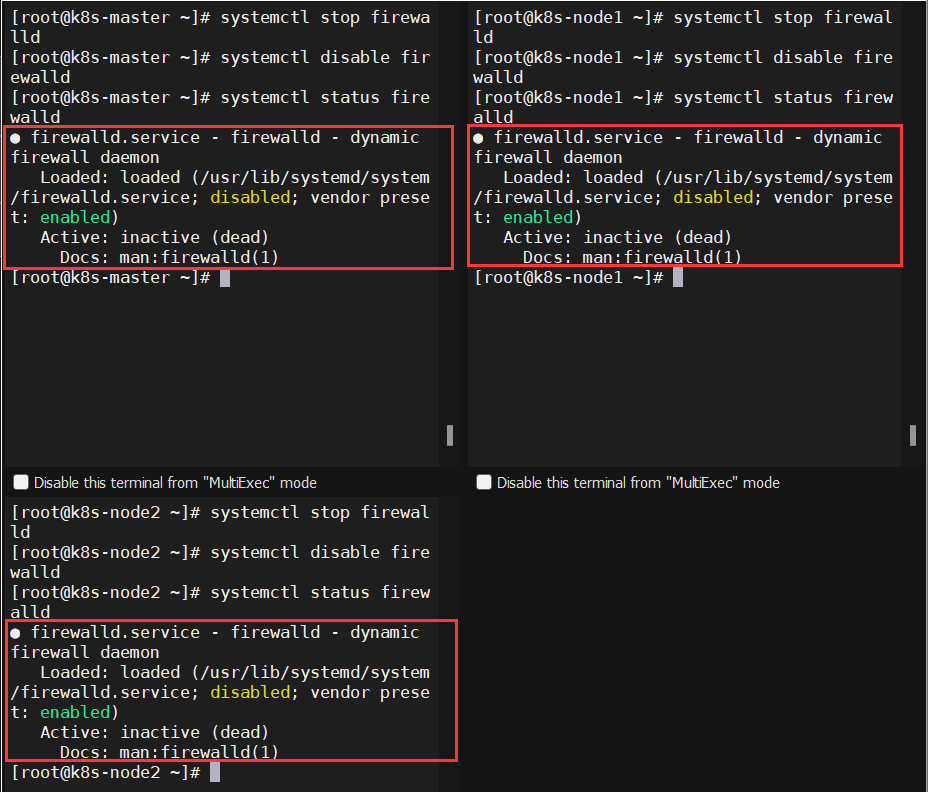

2.1. Disable the firewall on all machines

```shell
systemctl stop firewalld      # stop it now
systemctl disable firewalld   # do not start it at boot
systemctl status firewalld    # check the state
```

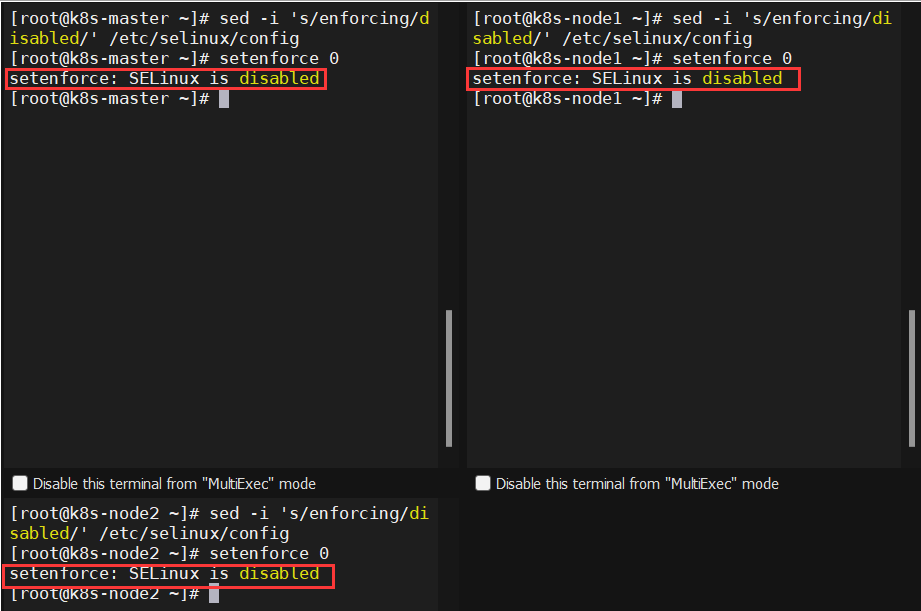

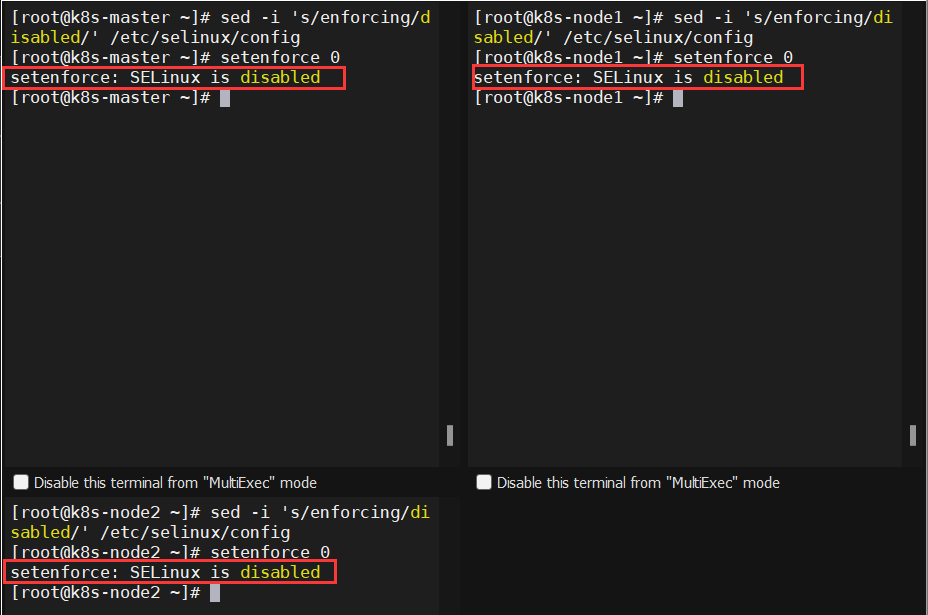

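2.2. Disable SELinux on all machines

```shell
sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config   # permanent, takes effect after a reboot
setenforce 0                                                          # permissive mode for the current boot
```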

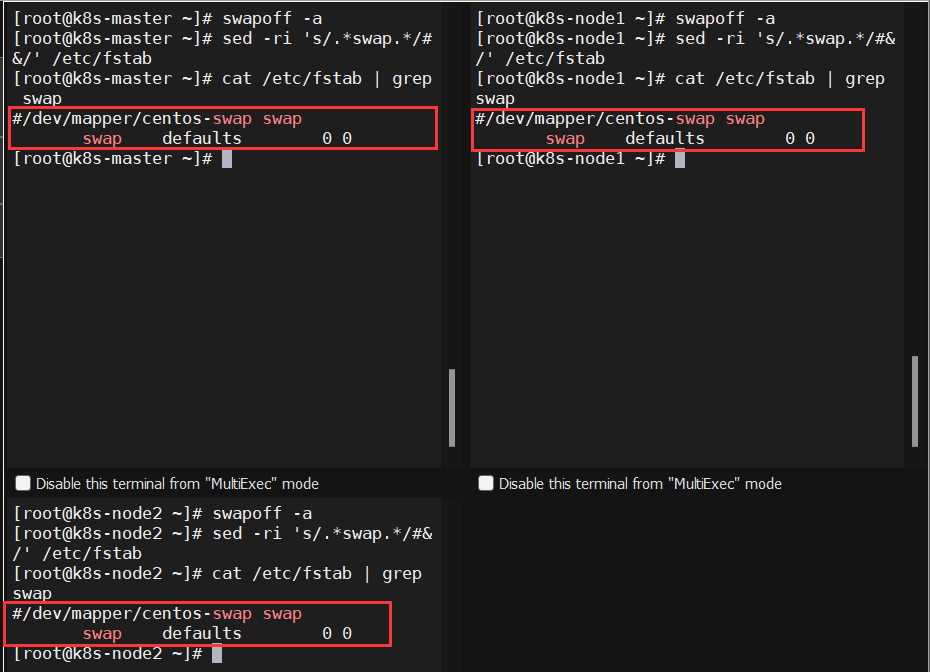

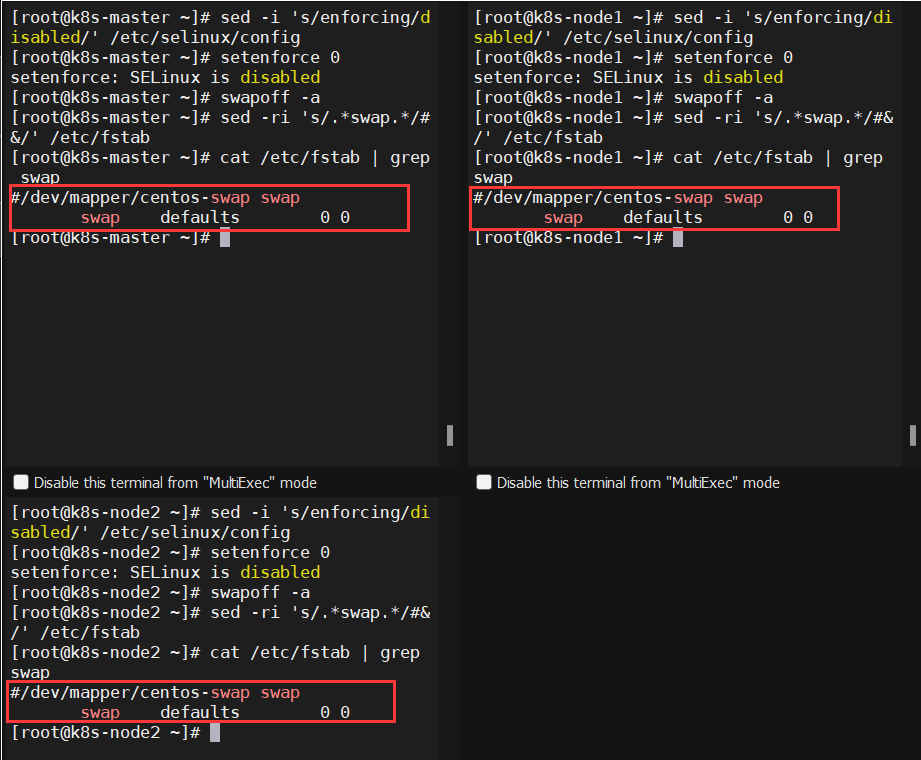

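2.3. Disable swap on all machines

```shell
swapoff -a                            # turn swap off for the current boot
sed -ri 's/.*swap.*/#&/' /etc/fstab   # comment out swap entries so it stays off after a reboot
```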

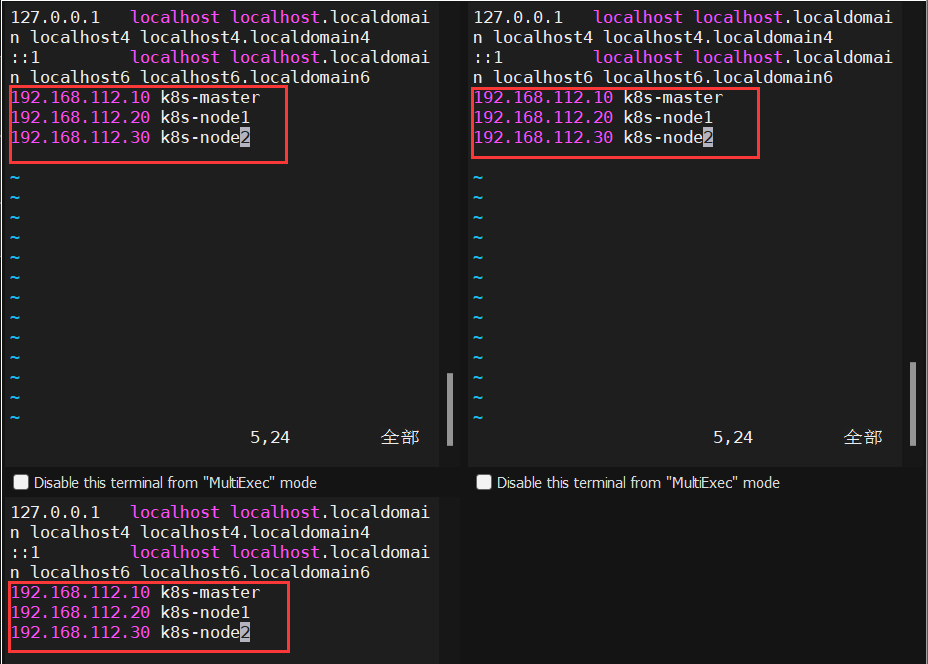

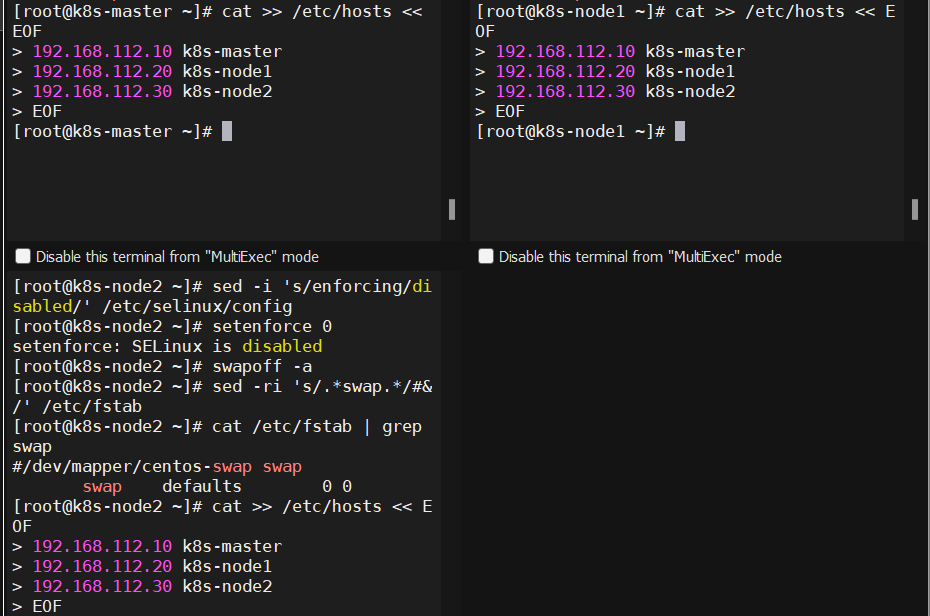

2.4. Map hostnames to IP addresses on all machines

```shell
cat >> /etc/hosts << EOF
192.168.112.10 k8s-master
192.168.112.20 k8s-node1
192.168.112.30 k8s-node2
EOF
```

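2.5. Pass bridged IPv4 traffic to the iptables chains on all hosts

```shell
modprobe br_netfilter   # the net.bridge.* keys exist only while this module is loaded
cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
sysctl --system   # apply the settings
```

2.6. Time synchronization

```shell
yum install -y ntpdate
ntpdate time.windows.com
```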

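3. Deploy the etcd cluster

3.1. About etcd

etcd is a distributed key-value store originally developed by CoreOS. It uses the Raft protocol as its consensus algorithm and is designed to store critical data reliably and quickly, and to provide access to shared configuration and service discovery. Distributed locks, leader election and write barriers make reliable distributed coordination possible. Kubernetes stores all cluster data in etcd, including Pods, Services and service discovery information.

Because etcd is the primary database of a Kubernetes cluster, it must be installed and running before any Kubernetes service is installed.

3.2. Node plan

| Node name | IP address |
|---|---|
| etcd-1 | 192.168.112.10 |
| etcd-2 | 192.168.112.20 |
| etcd-3 | 192.168.112.30 |

etcd can share machines with the k8s nodes or run on separate machines outside the cluster; the only requirement is that the apiserver can reach it.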

3.3. Prepare the cfssl certificate tools

3.3.1. Create a directory for the cfssl tools

cfssl is an open-source certificate management tool that generates certificates from JSON files.

Run on the master node:

```shell
mkdir -p /software-cfssl   # directory that holds the cfssl tools
```

3.3.2. Download the cfssl tools

```shell
wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -P /software-cfssl/ --no-check-certificate
wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -P /software-cfssl/ --no-check-certificate
wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -P /software-cfssl/ --no-check-certificate
```

3.3.3. Make the cfssl tools executable and copy them into the PATH

```shell
cd /software-cfssl/
chmod +x *
cp cfssl_linux-amd64 /usr/local/bin/cfssl
cp cfssljson_linux-amd64 /usr/local/bin/cfssljson
cp cfssl-certinfo_linux-amd64 /usr/bin/cfssl-certinfo
```

3.4. Self-signed certificate authority (CA)

3.4.1. Create the working directories

Run on the master node:

```shell
mkdir -p ~/TLS/{etcd,k8s}
cd ~/TLS/etcd/
```

3.4.2. Write the CA signing configuration

```shell
cat > ca-config.json << EOF
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "www": {
        "expiry": "87600h",
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ]
      }
    }
  }
}
EOF
```

1. `ca-config.json`: may define several profiles, each with its own expiry, usages and other parameters. When a certificate is signed later, the chosen profile determines settings such as its validity period.

2. `signing`: the certificate can be used to sign other certificates; the generated ca.pem has CA=TRUE.

3. `server auth`: a client can use this CA to verify the certificate presented by a server.

4. `client auth`: a server can use this CA to verify the certificate presented by a client.

3.4.3. Write the CA certificate signing request

```shell
cat > ca-csr.json << EOF
{
  "CN": "etcd CA",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Beijing",
      "ST": "Beijing"
    }
  ]
}
EOF
```

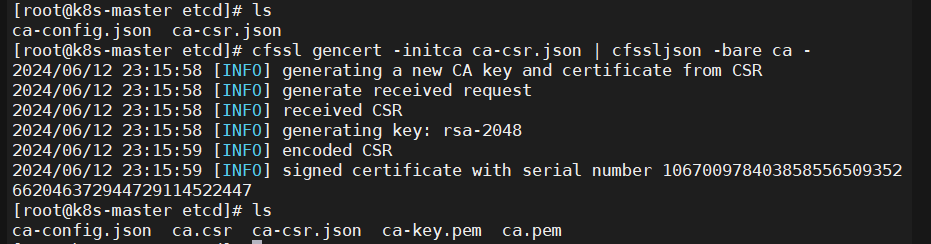

3.4.4. Generate the self-signed CA certificate and private key

```shell
cfssl gencert -initca ca-csr.json | cfssljson -bare ca -
```

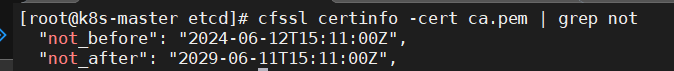

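3.4.5. Verify the CA certificate ca.pem

The CA certificate is valid for 5 years, which appears to be the cfssl default when ca-csr.json sets no `ca.expiry`. The 87600h in ca-config.json applies only to certificates signed with this CA.

```shell
cfssl certinfo -cert ca.pem | grep not
```

3.4.6. Check the validity period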

3.5. Issue the etcd HTTPS certificate with the self-signed CA

3.5.1. Write the certificate request

The `hosts` field must list the IP address of every etcd node.

```shell
cat > server-csr.json << EOF
{
  "CN": "etcd",
  "hosts": [
    "192.168.112.10",
    "192.168.112.20",
    "192.168.112.30"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Beijing",
      "ST": "Beijing"
    }
  ]
}
EOF
```

3.5.2. Generate the certificate

```shell
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server
```

3.5.3. List the certificate files generated so far

```text
[root@k8s-master etcd]# ls
ca-config.json  ca-csr.json  ca.pem      server-csr.json  server.pem
ca.csr          ca-key.pem   server.csr  server-key.pem
```

3.6. Download the etcd binaries

```shell
wget https://github.com/etcd-io/etcd/releases/download/v3.4.9/etcd-v3.4.9-linux-amd64.tar.gz
```

3.7. Deploy the etcd cluster

Run on the master node.

3.7.1. Create the working directories and unpack the binaries

```shell
mkdir -p /opt/etcd/{bin,cfg,ssl}
mv -f /root/TLS/etcd/etcd-v3.4.9-linux-amd64.tar.gz ~   # the tarball was downloaded into ~/TLS/etcd
cd ~                                                    # unpack where the tarball now lives
tar zxvf etcd-v3.4.9-linux-amd64.tar.gz
mv etcd-v3.4.9-linux-amd64/{etcd,etcdctl} /opt/etcd/bin/
```

3.7.2. Create the etcd configuration file

```shell
cat > /opt/etcd/cfg/etcd.conf << EOF
#[Member]
ETCD_NAME="etcd-1"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://192.168.112.10:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.112.10:2379"

#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.112.10:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.112.10:2379"
ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.112.10:2380,etcd-2=https://192.168.112.20:2380,etcd-3=https://192.168.112.30:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
```

- `ETCD_NAME`: node name, unique within the cluster
- `ETCD_DATA_DIR`: data directory
- `ETCD_LISTEN_PEER_URLS`: listen address for traffic between cluster members
- `ETCD_LISTEN_CLIENT_URLS`: listen address for client traffic
- `ETCD_INITIAL_ADVERTISE_PEER_URLS`: peer address this member advertises to the rest of the cluster
- `ETCD_ADVERTISE_CLIENT_URLS`: client address this member advertises
- `ETCD_INITIAL_CLUSTER`: addresses of all cluster members
- `ETCD_INITIAL_CLUSTER_TOKEN`: cluster token
- `ETCD_INITIAL_CLUSTER_STATE`: state when joining; `new` for a new cluster, `existing` to join a cluster that already exists

3.8. Manage etcd with systemd

```shell
cat > /usr/lib/systemd/system/etcd.service << EOF
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
EnvironmentFile=/opt/etcd/cfg/etcd.conf
ExecStart=/opt/etcd/bin/etcd \
--cert-file=/opt/etcd/ssl/server.pem \
--key-file=/opt/etcd/ssl/server-key.pem \
--peer-cert-file=/opt/etcd/ssl/server.pem \
--peer-key-file=/opt/etcd/ssl/server-key.pem \
--trusted-ca-file=/opt/etcd/ssl/ca.pem \
--peer-trusted-ca-file=/opt/etcd/ssl/ca.pem \
--logger=zap
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
```

3.9. Set up passwordless SSH between the three machines

```shell
ssh-keygen -t rsa
ssh-copy-id -i ~/.ssh/id_rsa.pub k8s-master
ssh-copy-id -i ~/.ssh/id_rsa.pub k8s-node1
ssh-copy-id -i ~/.ssh/id_rsa.pub k8s-node2
```

3.10. Copy the files generated on the master to node1 and node2

```shell
#!/bin/bash
# Put the certificates in place, then push /opt/etcd and the unit file
# to 192.168.112.20 and 192.168.112.30.
cp ~/TLS/etcd/ca*pem ~/TLS/etcd/server*pem /opt/etcd/ssl
for i in {2..3}
do
  scp -r /opt/etcd/ root@192.168.112.${i}0:/opt/
  scp /usr/lib/systemd/system/etcd.service root@192.168.112.${i}0:/usr/lib/systemd/system
done
```

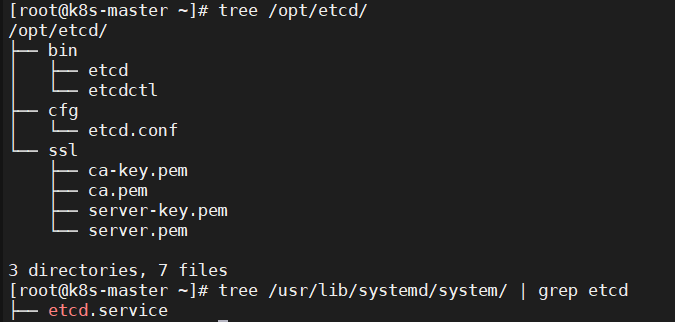

3.10.1. Inspect the directory tree on the master node

```text
[root@k8s-master ~]# tree /opt/etcd/
/opt/etcd/
├── bin
│   ├── etcd
│   └── etcdctl
├── cfg
│   └── etcd.conf
└── ssl
    ├── ca-key.pem
    ├── ca.pem
    ├── server-key.pem
    └── server.pem

3 directories, 7 files
```

3.10.2. Check that the etcd service unit exists

```shell
tree /usr/lib/systemd/system/ | grep etcd
```

Run both commands above on every node to verify the copy.

3.11. Adjust etcd.conf on node1 and node2

On node1, edit the file with `vim /opt/etcd/cfg/etcd.conf` so that it reads:

```text
#[Member]
ETCD_NAME="etcd-2"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://192.168.112.20:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.112.20:2379"

#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.112.20:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.112.20:2379"
ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.112.10:2380,etcd-2=https://192.168.112.20:2380,etcd-3=https://192.168.112.30:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
```

On node2, edit the file with `vim /opt/etcd/cfg/etcd.conf` so that it reads:

```text
#[Member]
ETCD_NAME="etcd-3"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://192.168.112.30:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.112.30:2379"

#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.112.30:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.112.30:2379"
ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.112.10:2380,etcd-2=https://192.168.112.20:2380,etcd-3=https://192.168.112.30:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
```
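The per-node files differ from the master's only in the member name and the node's own IP address, so they can also be generated instead of edited by hand. The following is a minimal sketch under that assumption; the function name `render_etcd_conf` is hypothetical, and the member list is hard-coded to the three addresses used in this article.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the etcd.conf for one member.
#   $1 = member name (for example etcd-2), $2 = that member's IP address
render_etcd_conf() {
  local name="$1" ip="$2"
  # Same member list on every node (addresses from the node plan in 3.2).
  local cluster="etcd-1=https://192.168.112.10:2380,etcd-2=https://192.168.112.20:2380,etcd-3=https://192.168.112.30:2380"
  cat << EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379"

#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="${cluster}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}

# Example (on node1):
#   render_etcd_conf etcd-2 192.168.112.20 > /opt/etcd/cfg/etcd.conf
```

Generating the file this way removes the risk of leaving the master's name or IP in a copied configuration, which would stop the member from joining.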

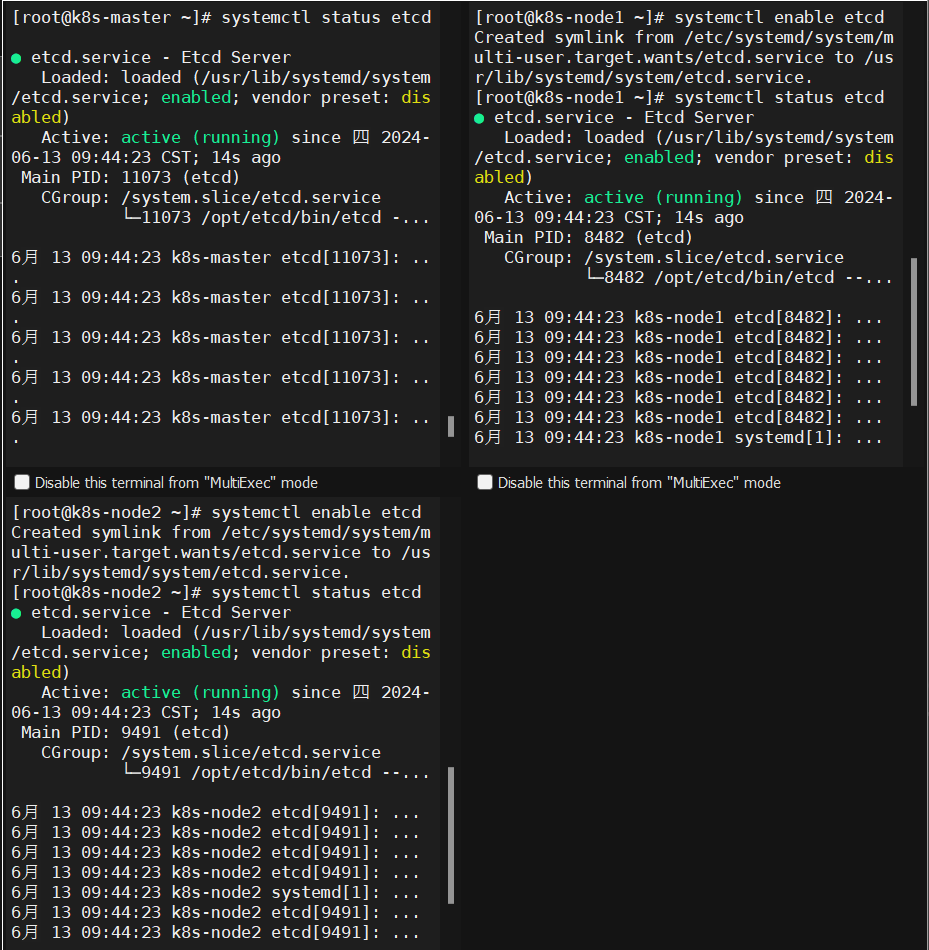

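3.12. Start etcd and enable it at boot

The etcd members have to be started at roughly the same time; `systemctl start etcd` on a single node hangs while it waits for the other members. Run each of the commands below on all three servers at the same time.

```shell
systemctl daemon-reload
systemctl start etcd
systemctl enable etcd
systemctl status etcd
```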
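Since the start has to happen on all three machines together, one option is to launch it from the master over SSH in parallel. This is a sketch, not the method used in this article: it assumes the passwordless SSH from step 3.9 is in place, and both the function name `run_on_all` and the `RUNNER` override (set it to `echo` for a dry run) are invented for this example.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run one command on several hosts in parallel.
# RUNNER defaults to ssh; RUNNER=echo only prints what would be run.
run_on_all() {
  local cmd="$1"; shift
  local host
  for host in "$@"; do
    "${RUNNER:-ssh}" "$host" "$cmd" &   # one background job per host
  done
  wait   # return only after every background job has finished
}

# Example (on the master):
#   run_on_all "systemctl daemon-reload && systemctl start etcd && systemctl enable etcd" \
#     k8s-master k8s-node1 k8s-node2
```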

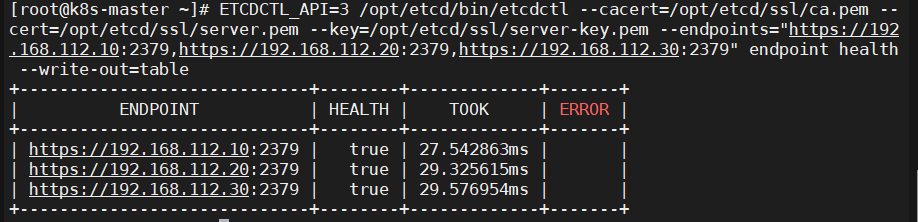

3.13. Check the etcd cluster state

Run `etcdctl endpoint health` to verify that etcd started correctly.

Run on the master node:

```shell
ETCDCTL_API=3 /opt/etcd/bin/etcdctl \
  --cacert=/opt/etcd/ssl/ca.pem \
  --cert=/opt/etcd/ssl/server.pem \
  --key=/opt/etcd/ssl/server-key.pem \
  --endpoints="https://192.168.112.10:2379,https://192.168.112.20:2379,https://192.168.112.30:2379" \
  endpoint health --write-out=table
```

3.13.1. Troubleshooting

The main approach is to read the etcd logs and investigate from there.

```shell
less /var/log/messages
journalctl -u etcd
```

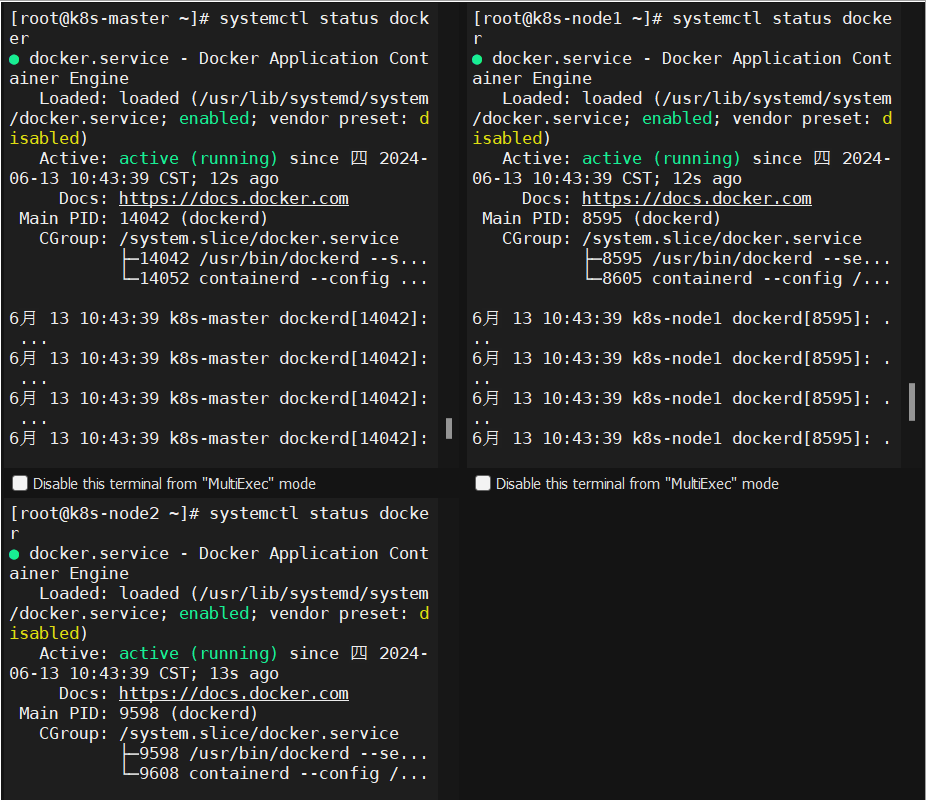

4. Install Docker on all nodes

4.1. Download and unpack the binary package

```shell
cd ~
wget https://download.docker.com/linux/static/stable/x86_64/docker-19.03.9.tgz
tar -zxvf docker-19.03.9.tgz && mv docker/* /usr/bin/   # move the binaries only after extraction succeeds
```

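4.2. Configure a registry mirror

```shell
mkdir -p /etc/docker
# Use > rather than >> so that re-running the step does not leave invalid JSON behind
cat > /etc/docker/daemon.json << EOF
{
  "registry-mirrors": ["https://jzjzrggd.mirror.aliyuncs.com"]
}
EOF
```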

4.3. Create docker.service

```shell
cat > /usr/lib/systemd/system/docker.service << EOF
[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
After=network-online.target firewalld.service
Wants=network-online.target

[Service]
Type=notify
ExecStart=/usr/bin/dockerd --selinux-enabled=false --insecure-registry=127.0.0.1
ExecReload=/bin/kill -s HUP \$MAINPID
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
#TasksMax=infinity
TimeoutStartSec=0
Delegate=yes
KillMode=process
Restart=on-failure
StartLimitBurst=3
StartLimitInterval=60s

[Install]
WantedBy=multi-user.target
EOF
```

`$MAINPID` is escaped so that the shell writes it to the unit file literally instead of expanding it to an empty string inside the heredoc.

4.4. Start Docker and enable it at boot

```shell
systemctl daemon-reload
systemctl start docker
systemctl enable docker
systemctl status docker
```

5. Deploy the master node

5.1. Generate the kube-apiserver certificates

5.1.1. Write the self-signed CA configuration

```shell
cd ~/TLS/k8s

cat > ca-config.json << EOF
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "kubernetes": {
        "expiry": "87600h",
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ]
      }
    }
  }
}
EOF
```

5.1.2. Write the CA certificate signing request

```shell
cat > ca-csr.json << EOF
{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Beijing",
      "ST": "Beijing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF
```

5.1.3. Generate the CA certificate

```shell
cfssl gencert -initca ca-csr.json | cfssljson -bare ca -
```

5.1.4. List the generated files

```text
[root@k8s-master k8s]# ls
ca-config.json  ca.csr  ca-csr.json  ca-key.pem  ca.pem
```

5.1.5. Write the server certificate request

```shell
cat > server-csr.json << EOF
{
  "CN": "kubernetes",
  "hosts": [
    "10.0.0.1",
    "127.0.0.1",
    "192.168.112.10",
    "192.168.112.20",
    "192.168.112.30",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF
```

5.1.6. Generate the server certificate

```shell
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server
```

5.1.7. List the generated files

```text
[root@k8s-master k8s]# ls
ca-config.json  ca-csr.json  ca.pem      server-csr.json  server.pem
ca.csr          ca-key.pem   server.csr  server-key.pem
```

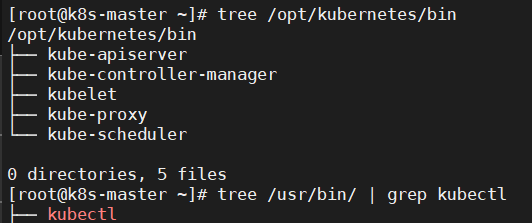

5.2. Download and unpack the binary package

Run on the master node:

```shell
mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}
wget https://storage.googleapis.com/kubernetes-release/release/v1.20.15/kubernetes-server-linux-amd64.tar.gz
tar zxvf kubernetes-server-linux-amd64.tar.gz
```

5.3. Copy the component binaries to /opt/kubernetes/bin and kubectl to /usr/bin

```shell
cd kubernetes/server/bin
cp kube-apiserver kube-scheduler kube-controller-manager kubelet kube-proxy /opt/kubernetes/bin
cp kubectl /usr/bin/
```

5.4. Check the result

```text
[root@k8s-master ~]# tree /opt/kubernetes/bin
/opt/kubernetes/bin
├── kube-apiserver
├── kube-controller-manager
├── kubelet
├── kube-proxy
└── kube-scheduler

0 directories, 5 files
[root@k8s-master ~]# tree /usr/bin/ | grep kubectl
├── kubectl
```

5.5. Deploy kube-apiserver

5.5.1. Create the configuration file

Run on the master node:

```shell
cat > /opt/kubernetes/cfg/kube-apiserver.conf << EOF
KUBE_APISERVER_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/opt/kubernetes/logs \\
--etcd-servers=https://192.168.112.10:2379,https://192.168.112.20:2379,https://192.168.112.30:2379 \\
--bind-address=192.168.112.10 \\
--secure-port=6443 \\
--advertise-address=192.168.112.10 \\
--allow-privileged=true \\
--service-cluster-ip-range=10.0.0.0/24 \\
--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \\
--authorization-mode=RBAC,Node \\
--enable-bootstrap-token-auth=true \\
--token-auth-file=/opt/kubernetes/cfg/token.csv \\
--service-node-port-range=30000-32767 \\
--kubelet-client-certificate=/opt/kubernetes/ssl/server.pem \\
--kubelet-client-key=/opt/kubernetes/ssl/server-key.pem \\
--tls-cert-file=/opt/kubernetes/ssl/server.pem \\
--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \\
--client-ca-file=/opt/kubernetes/ssl/ca.pem \\
--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--service-account-issuer=api \\
--service-account-signing-key-file=/opt/kubernetes/ssl/server-key.pem \\
--etcd-cafile=/opt/etcd/ssl/ca.pem \\
--etcd-certfile=/opt/etcd/ssl/server.pem \\
--etcd-keyfile=/opt/etcd/ssl/server-key.pem \\
--requestheader-client-ca-file=/opt/kubernetes/ssl/ca.pem \\
--proxy-client-cert-file=/opt/kubernetes/ssl/server.pem \\
--proxy-client-key-file=/opt/kubernetes/ssl/server-key.pem \\
--requestheader-allowed-names=kubernetes \\
--requestheader-extra-headers-prefix=X-Remote-Extra- \\
--requestheader-group-headers=X-Remote-Group \\
--requestheader-username-headers=X-Remote-User \\
--enable-aggregator-routing=true \\
--audit-log-maxage=30 \\
--audit-log-maxbackup=3 \\
--audit-log-maxsize=100 \\
--audit-log-path=/opt/kubernetes/logs/k8s-audit.log"
EOF
```

5.5.2. Copy the certificates generated earlier

```shell
cp ~/TLS/k8s/ca*pem ~/TLS/k8s/server*pem /opt/kubernetes/ssl/
```

5.5.3. Create the token file referenced in the configuration

```shell
cat > /opt/kubernetes/cfg/token.csv << EOF
62ee59c473229084c6b57a19eec91e26,kubelet-bootstrap,10001,"system:node-bootstrapper"
EOF
```

You can also generate your own token and substitute it:

```shell
head -c 16 /dev/urandom | od -An -t x | tr -d ' '
```
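Each line of the token file has the form `token,user,uid,"group"`. As a small sketch, the generation of the token and the formatting of the line can be combined in one function. The name `new_bootstrap_token_line` is hypothetical, and `od -t x1` is used here in place of `-t x` so the hex digits come out byte by byte; the result is still a 32-character hex token.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print one token.csv line with a fresh random token.
# Line format: token,user,uid,"group"
new_bootstrap_token_line() {
  local token
  # 16 random bytes rendered as 32 lowercase hex characters
  token=$(head -c 16 /dev/urandom | od -An -t x1 | tr -d ' \n')
  printf '%s,kubelet-bootstrap,10001,"system:node-bootstrapper"\n' "$token"
}

# Example:
#   new_bootstrap_token_line > /opt/kubernetes/cfg/token.csv
```

If you replace the token, use the same value everywhere the kubelet-bootstrap user authenticates with it.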

5.5.4. Manage the apiserver with systemd

```shell
cat > /usr/lib/systemd/system/kube-apiserver.service << EOF
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kube-apiserver.conf
ExecStart=/opt/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
```

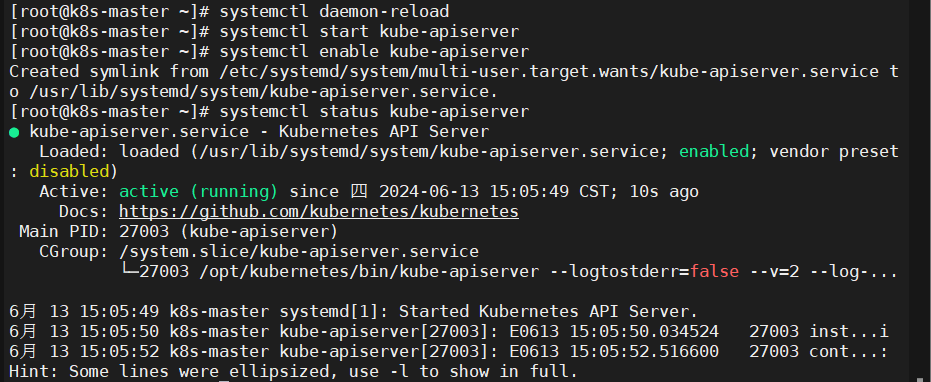

5.5.5. Start kube-apiserver and enable it at boot

```shell
systemctl daemon-reload
systemctl start kube-apiserver
systemctl enable kube-apiserver
systemctl status kube-apiserver
```

6. Deploy kube-controller-manager

6.1. Create the configuration file

Run on the master node:

```shell
cat > /opt/kubernetes/cfg/kube-controller-manager.conf << EOF
KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/opt/kubernetes/logs \\
--leader-elect=true \\
--kubeconfig=/opt/kubernetes/cfg/kube-controller-manager.kubeconfig \\
--bind-address=127.0.0.1 \\
--allocate-node-cidrs=true \\
--cluster-cidr=10.244.0.0/16 \\
--service-cluster-ip-range=10.0.0.0/24 \\
--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \\
--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--root-ca-file=/opt/kubernetes/ssl/ca.pem \\
--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--cluster-signing-duration=87600h0m0s"
EOF
```

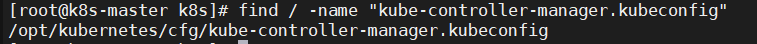

6.2. What the kubeconfig file looks like

The `--kubeconfig` flag above points at a file that does not exist yet; it is generated in step 6.6. Once generated it looks like this (the base64 data is abbreviated):

```text
[root@k8s-master ~]# cat /opt/kubernetes/cfg/kube-controller-manager.kubeconfig
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: LS0t
    server: https://192.168.112.10:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: kube-controller-manager
  name: default
current-context: default
kind: Config
preferences: {}
users:
- name: kube-controller-manager
  user:
    client-certificate-data: LS0t
    client-key-data: LS0t
```

6.3. Write the certificate request

```shell
cd ~/TLS/k8s

cat > kube-controller-manager-csr.json << EOF
{
  "CN": "system:kube-controller-manager",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing",
      "O": "system:masters",
      "OU": "System"
    }
  ]
}
EOF
```

6.4. Generate the kube-controller-manager certificate

```shell
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager
```

6.5. List the generated files

```text
[root@k8s-master k8s]# ls kube-controller-manager*
kube-controller-manager.csr       kube-controller-manager-key.pem
kube-controller-manager-csr.json  kube-controller-manager.pem
```

6.6. Generate the kubeconfig file

```shell
cd ~/TLS/k8s   # the ./kube-controller-manager*.pem paths below are relative to this directory

KUBE_CONFIG="/opt/kubernetes/cfg/kube-controller-manager.kubeconfig"
KUBE_APISERVER="https://192.168.112.10:6443"

# Cluster entry: API server address plus the embedded CA certificate
kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=${KUBE_CONFIG}

# User entry: the client certificate and key generated in 6.4
kubectl config set-credentials kube-controller-manager \
  --client-certificate=./kube-controller-manager.pem \
  --client-key=./kube-controller-manager-key.pem \
  --embed-certs=true \
  --kubeconfig=${KUBE_CONFIG}

# Context that ties the cluster and the user together
kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-controller-manager \
  --kubeconfig=${KUBE_CONFIG}

kubectl config use-context default --kubeconfig=${KUBE_CONFIG}
```

6.7. Manage the controller manager with systemd

```shell
cat > /usr/lib/systemd/system/kube-controller-manager.service << EOF
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes
After=kube-apiserver.service
Requires=kube-apiserver.service

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kube-controller-manager.conf
ExecStart=/opt/kubernetes/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
```

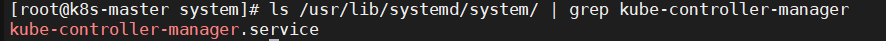

6.7.1. Check that kube-controller-manager.service was added

```shell
ls /usr/lib/systemd/system/ | grep kube-controller-manager
```

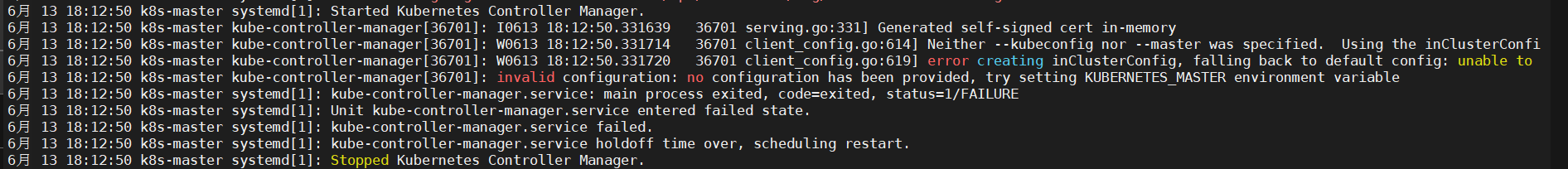

6.8. Start kube-controller-manager and enable it at boot

```shell
systemctl daemon-reload
systemctl start kube-controller-manager
systemctl enable kube-controller-manager
systemctl status kube-controller-manager
```

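6.8.1. Error: invalid configuration: no configuration has been provided, try setting KUBERNETES_MASTER environment variable

The message shows up in the service log:

```shell
journalctl -u kube-controller-manager
```

6.8.2. Solution

Not resolved yet. I am stopping here for now and will come back to it when I have time.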
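The Kubernetes client libraries report this message when the kubeconfig they load turns out to be empty. One thing worth ruling out, as an assumption rather than a confirmed diagnosis of the failure above, is that the file named by `--kubeconfig` is missing, empty, or incomplete, for example because the commands in 6.6 ran in a shell where `KUBE_CONFIG` was not set. The sketch below performs that basic check; the function name `check_kubeconfig` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: basic sanity check of a kubeconfig file.
# Succeeds only if the file exists, is non-empty, names an API server,
# and has a current-context set.
check_kubeconfig() {
  local file="$1"
  [ -s "$file" ] || { echo "missing or empty: $file" >&2; return 1; }
  grep -qE '^[[:space:]]*server:[[:space:]]*https?://' "$file" \
    || { echo "no cluster server entry in: $file" >&2; return 1; }
  grep -qE '^current-context:[[:space:]]*"?[^[:space:]"]+' "$file" \
    || { echo "no current-context set in: $file" >&2; return 1; }
}

# Example:
#   check_kubeconfig /opt/kubernetes/cfg/kube-controller-manager.kubeconfig
```

If the check fails, re-running the commands from 6.6 in a single shell session and then restarting the service is a reasonable next step to try.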